- TORCH NN SEQUENTIAL GET LAYERS HOW TO

- TORCH NN SEQUENTIAL GET LAYERS PATCH

- TORCH NN SEQUENTIAL GET LAYERS CODE

Multiple Convolutional Layers: High Level View

More kernels \(=\) more feature map channels, which can capture more information about the input.

Step 1 of building the network loads the MNIST dataset:

    import torch
    import torch.nn as nn
    import torchvision.transforms as transforms
    import torchvision.datasets as dsets

    '''
    STEP 1: LOADING DATASET
    '''
    # Training split (downloaded to ./data if not already present)
    train_dataset = dsets.MNIST(root='./data',
                                train=True,
                                transform=transforms.ToTensor(),
                                download=True)

    # Test split
    test_dataset = dsets.MNIST(root='./data',
                               train=False,
                               transform=transforms.ToTensor())
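The output size of a convolution depends on the input width, kernel size, padding, and stride. Below is a minimal sketch in plain Python, assuming a 28×28 MNIST image and a hypothetical 5×5 kernel with stride 1 (the kernel size is an assumption for illustration, not a value given above). The helper names are made up for this example.

```python
def conv_output_size(input_size, kernel_size, padding=0, stride=1):
    """Output width/height of a convolution: floor((W - K + 2P) / S) + 1."""
    return (input_size - kernel_size + 2 * padding) // stride + 1


def same_padding(kernel_size):
    """Padding that keeps the size unchanged for an odd kernel and stride 1."""
    return (kernel_size - 1) // 2


# Valid padding (no padding): the feature map shrinks.
valid_size = conv_output_size(28, 5)

# Same padding: the feature map keeps the input size.
same_size = conv_output_size(28, 5, padding=same_padding(5))

print(valid_size, same_size)
```

With valid padding the 28-pixel side shrinks to 24; with same padding it stays at 28.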


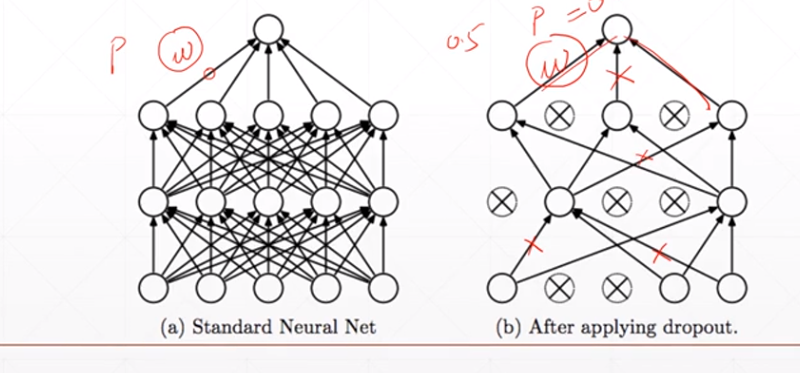

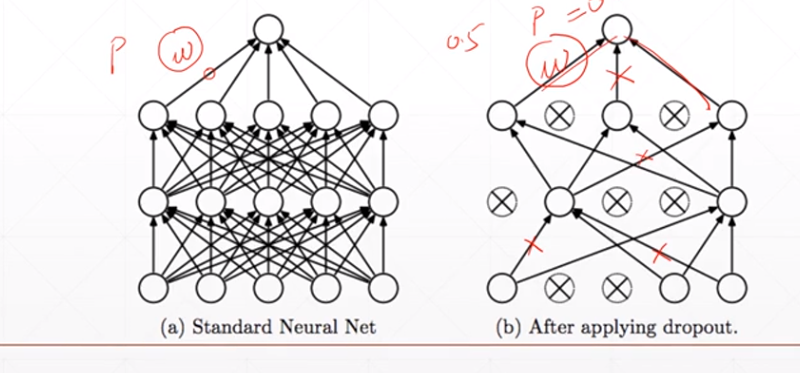
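A minimal sketch of a kernel sliding over an image, written in plain Python so that each step is visible. The 3×3 image, the 2×2 kernel, stride 1, and no padding are all hypothetical values chosen for illustration, and `convolve2d_valid` is a made-up helper, not a PyTorch function.

```python
def convolve2d_valid(image, kernel):
    """Slide a square kernel over a 2D image (stride 1, no padding).

    Like deep learning frameworks, this does not flip the kernel,
    so strictly speaking it computes a cross-correlation.
    """
    k = len(kernel)
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Operation 1: element-wise multiplication of the patch and the kernel
            products = [image[i + a][j + b] * kernel[a][b]
                        for a in range(k) for b in range(k)]
            # Operation 2: summation of the products into one output value
            row.append(sum(products))
        feature_map.append(row)
    return feature_map


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
kernel = [[1, 0],
          [0, 1]]

feature_map = convolve2d_valid(image, kernel)
print(feature_map)
```

Each entry of `feature_map` corresponds to one patch of the image, so a 3×3 input with a 2×2 kernel gives a 2×2 feature map.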

As the kernel slides (convolves) across the image, 2 operations are done per patch: an element-wise multiplication, then a summation.

A layer with an affine function & non-linear function is called a Fully Connected (FC) layer.

For instance, it seems I have to specify `size` in both `Sequential` and in the forward pass for each conv layer. It would be cleaner if I could just specify `Sequential("x, edge_index, return_attention_weights")` and `conv(x, edge_index, True)` without having to specify `size=None`, since it defaults to `None` in `GATConv`.

However, open to suggestions on how to make this cleaner.


I think this works well, but I am wondering if there is a better way?

Edit - I figured it out quickly after posting and thus edited the code posted in this discussion. The relevant fragments are:

    "x, edge_index, size, return_attention_weights -> x, a",
    GATConv(in_channels, 10, heads=2, dropout=0.6),
    "x, edge_index, size, return_attention_weights",
